Dotnet docker experiences

By Martin. Regards: Infrastructure; Docker; Azure

Transitioning an Application from On-Premises to Azure Docker Containers

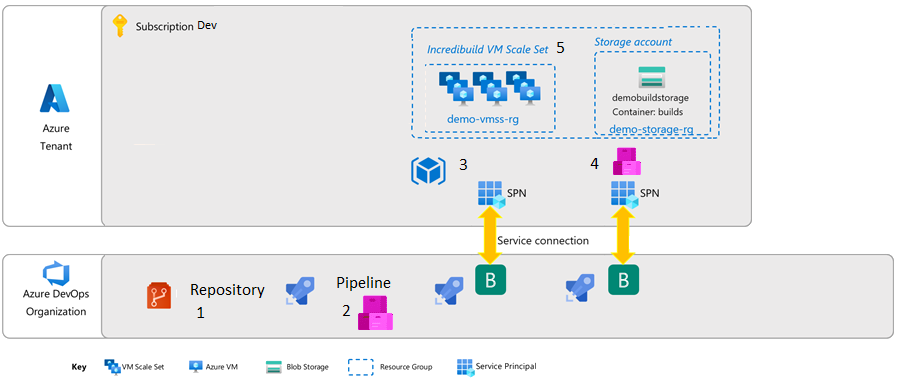

In a previous blog post, I discussed the process of migrating an on-premises application to an Azure Scale Set. Recently, I again had the opportunity to transition an existing .NET background service to Azure, this time using Docker. A key distinction between the previously migrated application and the one targeted for this migration was its lack of Windows dependencies. This meant that the application could feasibly be migrated to a Docker container.

Starting with Docker containers

Despite my limited experience with Docker containers, I was eager to delve deeper and expand my knowledge. I immersed myself in numerous documentation resources to understand how to create a Docker container using a Dockerfile. Most examples demonstrated the use of a Linux base image and the building of the application using the .NET CLI like the following:

FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build-env

WORKDIR /App

# Copy everything

COPY . ./

# Restore as distinct layers

RUN dotnet restore

# Build and publish a release

RUN dotnet publish -c Release -o out

# Build runtime image

FROM mcr.microsoft.com/dotnet/aspnet:8.0

WORKDIR /App

COPY --from=build-env /App/out .

ENTRYPOINT ["dotnet", "DotNet.Docker.dll"]

The private NuGet feed problem

The above Dockerfile works great for applications that do not depend on private NuGet feeds. However, as you might have anticipated, the application targeted for migration did rely on a private NuGet feed. One potential workaround would be to incorporate the credentials for the private NuGet feed directly into the Dockerfile. There are different ways to accomplish this; one of them is described in the article "Consuming private NuGet feeds from a Dockerfile in a secure and DevOps friendly manner".

While this approach might seem straightforward, it’s not without its drawbacks. Apart from the glaring security implications, the process of making these adjustments can be quite cumbersome.

Fortunately, the .NET CLI comes to the rescue, offering support for building Docker images with the following command:

dotnet publish --os linux --arch x64 /t:PublishContainer -c Release

The Docker image was constructed within a CI/CD pipeline, an environment already authenticated and granted access to the private NuGet feed. As a result, there was no need for additional configuration.

Build:
  [...]
  - task: DotNetCoreCLI@2
    displayName: 'dotnet restore'
    inputs:
      command: 'restore'
      projects: '*.sln'
      feedsToUse: 'config'
      nugetConfigPath: '$(Build.SourcesDirectory)/nuget.config'
  - task: DotNetCoreCLI@2
    displayName: 'dotnet build'
    condition: succeeded()
    inputs:
      command: 'build'
  - task: DotNetCoreCLI@2
    displayName: 'dotnet publish'
    inputs:
      command: 'publish'
      arguments: '-c Release --os linux --arch x64 /t:PublishContainer /p:ContainerImageTag=latest /p:ContainerRepository=DotNetDockerTemp'
      projects: $(Build.SourcesDirectory)/src/**/DotNetDockerTemp.csproj
      feedsToUse: 'config'
      nugetConfigPath: '$(Build.SourcesDirectory)/nuget.config'
  - task: PowerShell@2
    inputs:
      targetType: 'inline'
      script: |
        docker save DotNetDockerTemp:latest -o $(Build.ArtifactStagingDirectory)/DotNetDockerTemp.tar
  - task: PublishBuildArtifacts@1
  [...]

(1. Code snippet from the build pipeline)

Note that if you want to create a Docker image based on the Alpine image, you need to specify the matching runtime identifier (RID); otherwise, the application will not start. Alpine uses musl instead of glibc, so the RID is linux-musl-x64 rather than linux-x64. The available RIDs are listed in the .NET RID catalog.

dotnet publish -c Release --runtime=linux-musl-x64 /t:PublishContainer /p:ContainerImageTag=latest /p:ContainerRepository=DotNetDockerTemp /p:ContainerBaseImage=mcr.microsoft.com/dotnet/runtime:8.0-alpine
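To avoid mixing up the RID and the base image, the choice can be derived from the image tag. The following is only a minimal sketch: the function name rid_for_base_image is hypothetical, and it assumes that Alpine-based tags contain the word "alpine" and that everything else is a glibc-based Linux image.

```shell
# Hypothetical helper: pick a runtime identifier (RID) from a base image tag.
# Assumption: Alpine-based tags contain "alpine"; all other tags are
# treated as glibc-based Linux images.
rid_for_base_image() {
  image="$1"
  arch="${2:-x64}"   # CPU architecture, defaults to x64
  case "$image" in
    *alpine*) echo "linux-musl-$arch" ;;
    *)        echo "linux-$arch" ;;
  esac
}

# Usage (not executed here):
# base=mcr.microsoft.com/dotnet/runtime:8.0-alpine
# dotnet publish -c Release --runtime "$(rid_for_base_image "$base")" \
#   /t:PublishContainer /p:ContainerBaseImage="$base"
```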

Multiple Environments and the appsettings.json

You may have noticed in the above build pipeline that we are using the dotnet publish /t:PublishContainer command. This command builds and publishes the Docker image to the local Docker repository. While this is excellent for local development, it raises questions about handling multiple environments and the appsettings.json file.

To address this, we save the locally published Docker image into the DotNetDockerTemp.tar file and add it to the artifacts of the build pipeline (see 1. Code snippet from the build pipeline). During the release pipeline, we download the artifact and load the Docker image into the local Docker repository using the docker load -i DotNetDockerTemp.tar command.
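As a rough sketch of the load step, the function below checks that the downloaded artifact exists before calling docker load, so a missing artifact produces a clear message. The name load_image_artifact is my own placeholder and not part of the original pipeline.

```shell
# Hypothetical helper: load a saved image tarball into the local Docker daemon.
# Fails early with a readable message if the artifact was not downloaded.
load_image_artifact() {
  tar_path="$1"
  if [ ! -f "$tar_path" ]; then
    echo "artifact not found: $tar_path" >&2
    return 1
  fi
  docker load -i "$tar_path"
}

# Usage (not executed here):
# load_image_artifact DotNetDockerTemp.tar
```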

Next, we tackle the appsettings.json file. For this, we require the FileTransform@1 task, which transforms the appsettings.json file based on the environment. While we can’t inject the appsettings.json file into the existing Docker image DotNetDockerTemp:latest, we can create a new Docker image based on the existing one.

Release-Dev:
  [...]
  - task: PowerShell@2
    displayName: 'Inject appsettings.json into Docker image'
    inputs:
      targetType: "inline"
      script: |
        docker create --name dockertemp DotNetDockerTemp:latest
        docker cp appsettings.json dockertemp:/app/appsettings.json
        docker commit dockertemp DotNetDockerFinal:latest
  - task: AzureCLI@2
    displayName: Azure Container Registry Login
    inputs:
      azureSubscription:
      scriptType: 'pscore'
      scriptLocation: 'inlineScript'
      inlineScript: |
        az acr login --name $(AzureContainerRegistryLoginName)
        docker push [...]

(Code snippet from the release pipeline)

Finally, as a last step, we push the Docker image to the Azure Container Registry.
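The create, cp, and commit sequence from the release pipeline leaves the temporary container behind. The following sketch wraps the same three Docker commands in a function and removes the container afterwards. The function name inject_settings and the generated container name are assumptions for illustration; the /app/appsettings.json target path is the one used in the pipeline above.

```shell
# Hypothetical helper: copy a settings file into a stopped container,
# commit the result as a new image, and always remove the temporary container.
inject_settings() {
  source_image="$1"
  settings_file="$2"
  target_image="$3"
  container="settings-inject-$$"   # temporary name based on the shell PID

  docker create --name "$container" "$source_image" >/dev/null || return 1

  status=0
  docker cp "$settings_file" "$container:/app/appsettings.json" \
    && docker commit "$container" "$target_image" >/dev/null \
    || status=1

  # Clean up even when cp or commit failed.
  docker rm "$container" >/dev/null
  return "$status"
}

# Usage (not executed here):
# inject_settings DotNetDockerTemp:latest appsettings.json DotNetDockerFinal:latest
```

Because the cleanup runs regardless of the outcome, repeated runs on the same build agent should not fail on a leftover container name.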

Custom DNS and SSL Certificates

Upon starting the Azure Container Instance (ACI), I discovered that the service was unable to access certain endpoints. After some investigation, connecting to the container instance with az container exec (or using the Azure portal) and probing with nslookup and related commands, I deduced that the Docker container instance was incapable of resolving our custom domain names. This is understandable, as the Docker container instance does not know our custom DNS server. This issue can be resolved by configuring the dnsConfig for your ACI.
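The probing described above can be sketched as a small wrapper around az container exec. The function name probe_dns and its parameters are placeholders of mine, and the sketch assumes that nslookup is available inside the image (on Alpine-based images it is typically provided by BusyBox).

```shell
# Hypothetical helper: run nslookup inside a running ACI container group
# to check whether a host name resolves from within the container.
probe_dns() {
  resource_group="$1"
  container_group="$2"
  host="$3"
  az container exec \
    --resource-group "$resource_group" \
    --name "$container_group" \
    --exec-command "nslookup $host"
}

# Usage (not executed here, all three values are placeholders):
# probe_dns my-resource-group my-container-group internal.example.local
```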

With the updated settings, the app was able to resolve the endpoint but encountered an exception due to an invalid SSL certificate. Once again, this is logical as the Docker container instance is unaware of our custom SSL certificate. To enable the app or the operating system to accept the custom certificate of our endpoint, we need to add the public key of the certificate to the trusted root certificates of the Docker container instance. I was unable to find a method to accomplish this within the pipeline using the Docker command line. Consequently, I opted to create a custom Docker image based on the existing one and add the public key of the certificate to the trusted root certificates of the Docker container instance.

FROM DotNetDockerTemp:latest AS base

WORKDIR /app

# Second stage: Use the Alpine image

FROM mcr.microsoft.com/dotnet/runtime:8.0-alpine AS final

USER $APP_UID

WORKDIR /app

# Copy files from the first stage

COPY --from=base /app /app

USER root

COPY ["certificate.crt", "/usr/local/share/ca-certificates/"]

RUN apk add --no-cache ca-certificates

RUN update-ca-certificates

USER $APP_UID

ENTRYPOINT ["/app/DotNetDocker"]

CMD ["-s"]

This Dockerfile uses the Docker image that was previously created with the dotnet publish /t:PublishContainer [...] command and adds the public key of the certificate to the trusted root certificates of the Docker container instance. Since the app DotNetDocker needs to be started with DotNetDocker -s, we need to pass -s via the CMD instruction. To build the Docker image using the Dockerfile, we can use the following command:

docker buildx build -f src/Dockerfile . -t localhost:5000/DotNetDockerFinal:latest --no-cache --progress=plain

- -f: Path to the dockerfile

- .: Path to the context; current directory where docker buildx build is executed (important for the COPY command)

- -t: Tag of the docker image

- --no-cache: Do not use cache when building the image

- --progress=plain: Show the progress of the build
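One detail that is easy to miss with the certificate: as far as I know, update-ca-certificates only picks up PEM-encoded files with a .crt extension. The sketch below is a simple pre-build check under that assumption. The function name is_pem_certificate is hypothetical, and the check only looks for the PEM header; it does not validate the certificate itself.

```shell
# Hypothetical pre-build check: succeed only if the file exists and
# contains a PEM certificate header. This does not validate the certificate.
is_pem_certificate() {
  cert_file="$1"
  [ -f "$cert_file" ] && grep -q -- '-----BEGIN CERTIFICATE-----' "$cert_file"
}

# Usage (not executed here):
# is_pem_certificate certificate.crt || echo "certificate.crt is not PEM encoded" >&2
```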

Wrapping Up

Transitioning the application into a Docker container proved to be a swift and straightforward process. However, it’s important to note that this journey can entail numerous considerations that may demand a significant investment of time, particularly when the source code of the application is not open to modifications.

I’m eager to hear your thoughts on this process. Would you have approached it differently? Do you have any queries or insights to share? Your feedback is invaluable, and I look forward to our continued discussions on this topic.
